This work presents a graph neural network (GNN) framework for solving the maximum independent set (MIS) problem, inspired by dynamic programming (DP). Specifically, given a graph, we propose a DP-like recursive algorithm based on GNNs that firstly constructs two smaller sub-graphs, predicts the one with the larger MIS, and then uses it in the next recursive call. To train our algorithm, we require annotated comparisons of different graphs concerning their MIS size. Annotating the comparisons with the output of our algorithm leads to a self-training process that results in more accurate self-annotation of the comparisons and vice versa. We provide numerical evidence showing the superiority of our method vs prior methods in multiple synthetic and real-world datasets.

The Maximum Independent Set (MIS) problem is a well-known challenge in graph theory and combinatorial optimization. This paper presents an approach for MIS by using the idea of Dynamic Programming and self-supervised learning. Solutions to this problem are vital for applications in network theory, scheduling problems, and resource allocation.

MIS Algorithms Induced by Graph Comparing Functions

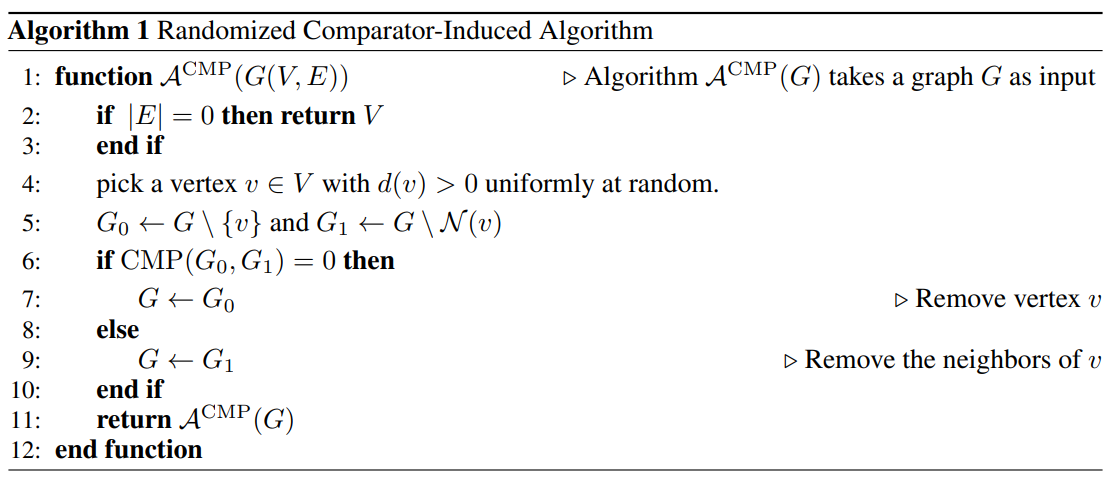

We employ graph comparing functions to analyze graph structures. These functions are designed to compare different graphs, helping to determine which of the graphs has a larger estimated MIS. By combining the tree search of dynamic programming with these comparator function, independent sets can be generated. We refer to this as the induced algorithm of a comparator function, and it is depicted below:

Comparators through Graph Neural Networks

The core of our approach involves developing comparators using GNNs. These comparators are trained to recognize patterns and structures within graphs that are indicative of optimal independent sets. GNNs excel in this task due to their ability to capture the relational information between nodes and edges in a graph, a crucial aspect for solving the MIS problem.

This figure illustrates the architecture of our model. An input graph is passed into the model with zero node embeddings. These nodes are processed through a Graph Embedding Module (GEM) for K iterations, resulting in the final node embeddings. These embeddings are then averaged to obtain a graph embedding , which is put through multiple fully-connected layers to obtain a final logit value for the input graph. When comparing multiple graphs, a larger estimated MIS means a larger logit value.

Inference Algorithm

After training, the GNN-based comparators are used in an inference algorithm to compare between subgraphs of possible branches in the DP tree. Once a terminal state is reached, a feasible independent set in created.

A notable aspect of our methodology is the implementation of self-supervised training using the consistency property of graph comparing functions.

Consistent Graph Comparing Functions

Consistency is a property of graph comparing functions that ensures that the algorithm's performance is stable and reliable. We prove that when a comparator is consistent, its induces algorithm is optimal.

Training a Consistent Comparator

Training a consistent comparator involves a self-supervised learning approach, where the model is trained using a dataset with labels that are generated by its own estimates. This method is particularly advantageous as it reduces the dependency on labeled datasets, which are often scarce in NP-Hard problems. The figure below illustrates the 3-step process of generating a dataset, which is done multiple times during the course of training.

We conducted several experiments to evaluate the efficacy of our proposed methods. Here are the key findings:

@inproceedings{brusca2023maximum,

title={Maximum Independent Set: Self-Training through Dynamic Programming},

author={Brusca, Lorenzo and Quaedvlieg, Lars CPM and Skoulakis, Stratis and Chrysos, Grigorios G and Cevher, Volkan},

booktitle={Advances in neural information processing systems (NeurIPS)},

year={2023}

}This work was supported by the Swiss National Science Foundation (SNSF) under grant number 200021_205011, by Hasler Foundation Program: Hasler Responsible AI (project number 21043) and Innovation project supported by Innosuisse (contract agreement 100.960 IP-ICT). We further thank Elias Abad Rocamora and Nikolaos Karalias for the useful feedback on the paper draft as well as Planny for the helpful discussions.